Tag: Virtual Machine

Innovative Virtual Cache Solution Using SSDs to Increase Virtual Machine Density

VMworld US 2012: Proximal Data, the leading provider of server-side caching solutions for virtualized environments, today announced a technology collaboration with Micron Technology Inc. (Nasdaq: MU), one of the world’s leading providers of advanced enterprise flash storage. Proximal Data’s AutoCache™ fast virtual cache software, when combined with Micron’s P400e SATA and P320h PCIe solid-state drives…

NextIO Updates vNET Solution for Streamlined Server I/O Management

NextIO, the pioneer in I/O consolidation and virtualization solutions, today announced significant enhancements to its vNET I/O Maestro solution with the release of nControl Version 2.2. The nControl management software, which is a standard feature in vNET I/O Maestro, includes new functionality to simplify assignment of resources to virtual machines, along with support for VMware…

Lack of Communication and Cross-Domain Tools Impede Collaboration Needed for VM Deployments

Research analyst firm Enterprise Management Associates® (EMA™) and Infoblox Inc. today announced the results of a recent survey assessing the impact of cross-team collaboration on virtual machine (VM) deployments. The survey results reveal that VM deployments require significant involvement from multiple IT departments beyond the server virtualization team, including datacenter/systems administration (physical infrastructure), networking operations,…

PHD Virtual Records Record Revenue in 2011, Virtualization Product Portfolio Expansion

PHD Virtual Technologies, pioneer and innovator in virtual machine backup and recovery, and provider of virtualization monitoring solutions, announced record revenue for fiscal year 2011. PHD Virtual continued its trend of strong quarterly growth, with the fourth quarter of 2011 marking the sixth consecutive quarter of record revenue growth for the company. The company achieved…

VMware: Unable to migrate VM, missing snapshot file and out of space Error

The error in the headline is right out of snapshot hell. If you have virtual machines (VMs) with large memory requirements, you probably know that you need extra space on the datastore to store the memory swap file (.vswp). When the datastore housing the VM runs out of disk space, you will not be able to create…
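To make the space requirement concrete: ESXi sizes a VM's .vswp file as its configured memory minus its memory reservation, and by default places it in the VM's directory on the same datastore. The sketch below is a hypothetical pre-flight check built on that rule; the function names and the optional `headroom_gb` safety margin are illustrative assumptions, and the check deliberately ignores snapshot delta files and VMX overhead swap, which also consume datastore space.

```python
def vswp_size_gb(configured_memory_gb: float, reservation_gb: float = 0.0) -> float:
    """Return the .vswp size: configured memory minus the memory reservation."""
    if reservation_gb < 0 or reservation_gb > configured_memory_gb:
        raise ValueError("reservation must be between 0 and configured memory")
    return configured_memory_gb - reservation_gb


def has_room_for_vswp(
    free_gb: float,
    configured_memory_gb: float,
    reservation_gb: float = 0.0,
    headroom_gb: float = 0.0,  # hypothetical safety margin, not a VMware setting
) -> bool:
    """Check whether the datastore can hold the swap file plus an optional margin.

    Simplified assumption: only the .vswp file is counted; snapshot deltas and
    other VM files are ignored.
    """
    return free_gb - vswp_size_gb(configured_memory_gb, reservation_gb) >= headroom_gb


if __name__ == "__main__":
    # A 64 GB VM with no reservation needs a 64 GB swap file, so 50 GB free is not enough.
    print(has_room_for_vswp(free_gb=50, configured_memory_gb=64))
    # Reserving 32 GB shrinks the swap file to 32 GB, which fits in 50 GB.
    print(has_room_for_vswp(free_gb=50, configured_memory_gb=64, reservation_gb=32))
```

Raising the memory reservation is one way to shrink the swap file, at the cost of locking that much host memory to the VM.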

CiRBA Releases Revolutionary Control Console for Virtual and Cloud Infrastructure

CiRBA Inc., a leader in Data Center Intelligence (DCI) software, today announced the general availability of Version 7.0 of CiRBA DCI-Control, which revolutionizes how organizations control virtual and cloud infrastructure. CiRBA’s new Control Console enables IT organizations to see in a single glance where attention is required at the VM, host, and cluster level, and…

Astute Networks Reduces ViSX G3 for VMware Pricing to Under $20,000 MSRP

Astute Networks®, the innovator of ViSX G3™ for VMware, featuring its patented Data Pump Engine™ technology and award-winning Networked Performance Flash™ architecture, today announced lower pricing that makes ViSX G3 more affordable than ever. Effective immediately, ViSX G3, with 100,000 IOPS of sustained random I/O performance, starts at under $20,000 MSRP. “Our fully leveraged Channels…

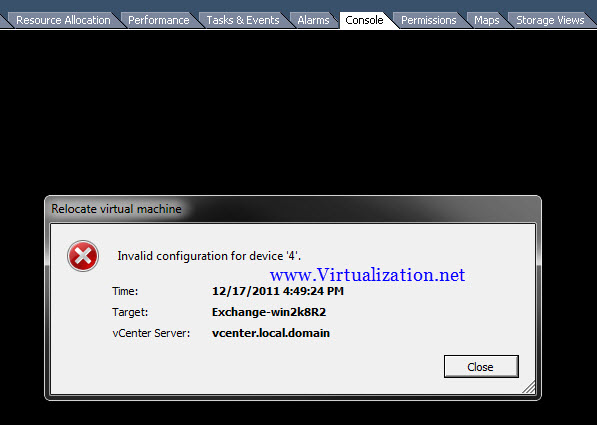

Invalid configuration for device 4 Error When Cloning or vMotion a Virtual Machine

When performing a vMotion of a virtual machine (VM) or template in a vDS (vSphere Distributed Switch) configuration, you may come across this error: “Invalid configuration for device ‘4’.” I experienced this error in my lab over the weekend, and removing the VM from the vDS port group fixed the issue. According to VMware, the cause of…